Name of Project: LEAP

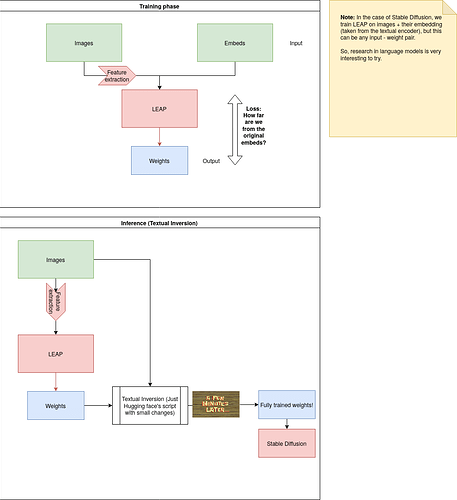

Proposal in one sentence: Fast fine-tuning of models by using a local minimum as the initial weights.

Description of the project and what problem it is solving: So far, we have made Textual Inversion (adding your own concept to Stable Diffusion) more than 10 times faster. It has been released in the Thingy Discord bot, open-sourced on GitHub, and published as a standalone project at github.com/peterwilli/sd-leap-booster. The potential benefits are substantial. Fine-tuning services could raise their profit margins or lower their prices, and the approach could be extended to other model types, such as language models.

Grant Deliverables:

- Researching and releasing LEAP+LoRA, which integrates full pivotal tuning (DreamBooth and Textual Inversion in one) at similar, if not faster, speeds. This work is already in the research phase: https://github.com/peterwilli/sd-leap-booster/tree/lora-test-3

- Researching LEAP for other model types, e.g. language models.

Squad

Squad Lead:

- Twitter: @codebuffet

- Discord: EmeraldOdin#1991

Additional notes for proposals

Thanks to a generous person who lent me his GPU rig, I was able to accelerate my work, and the results serve as living proof of what more could be done in the future.

Thanks for reading. Love to all, and good luck to everyone in this round and the next.