Name of Project: Prompt Extend

Proposal in one sentence: A text generation model that extends Stable Diffusion prompts with suitable style cues.

Description of the project and what problem it is solving:

Currently available diffusion models usually need complex prompts, with additional style cues, to generate beautiful images.

To help with this kind of prompt engineering, I’ve built a text generation model that expands on the main idea of a prompt and generates suitable style cues to append to it.

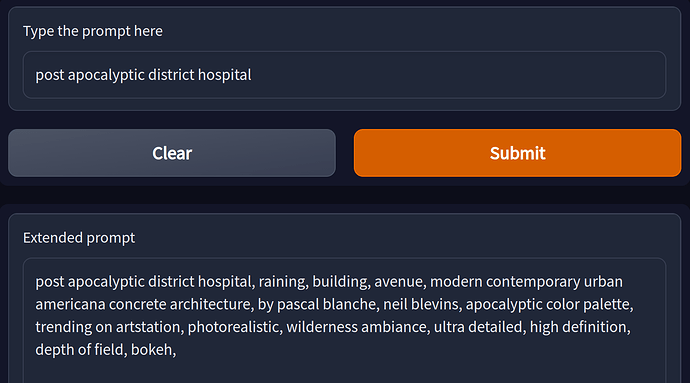

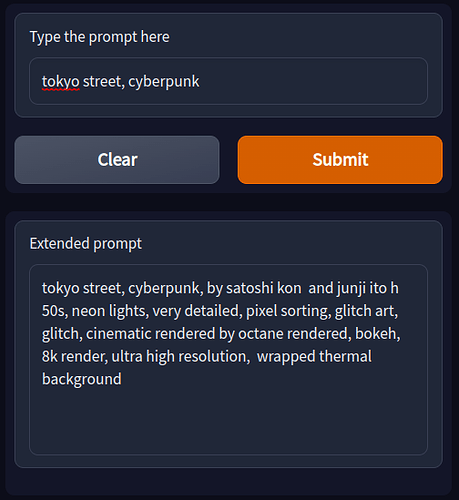

Example:

You can try it out on a Hugging Face Space. The code is in the project’s GitHub repo, and the model is also available on the Hugging Face Hub. The project is fully open source.

Grant Deliverables:

- Scale up the model architecture and try out different techniques to improve it.

- Experiment with fine-tuning currently available pre-trained text models on the prompts dataset, compare their outputs with those of the current from-scratch approach, and possibly try a combination of both.

- Add the model as a custom pipeline to the diffusers library so that it can be used directly for image generation.

Round 7 deliverables completed:

Increased the training dataset from 80k to ~2 million prompts and improved the tokenizer and the model, resulting in much better, more context-aware style cue suggestions.

Squad

Partho Das. So far, it is a solo project.

- Twitter handle: daspartho_

- Discord handle: daspartho#3367

- ETH mainnet wallet address for potential funds: 0xb70003E35ec3368c1B1BA82aa64C3687A730e107

A grant would help me develop the project further.